The replay clipper has been ported to Replicate.com!

https://replicate.com/nelsonjchen/op-replay-clipper

Along with it comes a slew of upgrades and updates:

- GPU accelerated decoding, rendering, and encoding. NVIDIA GPUs are provided to the Replicate environment and greatly speed up the clipper.

- Fast downloading of clips. Instead of relying on replay to download data sequentially, we use the parfive library to download the underlying data in parallel.

- Comma Connect URL Input. No need to mentally calculate the starting time and length. Just copy and paste the URL from Comma Connect.

- Video/UI-less mode. Don’t want UI? You can have it.

- 360 mode. Render 360 clips.

- Forward Upon Wide. Render clips with the forward clip upon the wide clip.

- Richer error messages to help pinpoint issues.

- No more having to manage GitHub Codespaces. Replicate handles all the setup and cleanup for you.
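The parallel download idea from the list above can be sketched with only the standard library. This is not the clipper's actual code, which uses parfive; `download_all`, the injected `fetch` callable, and the example URLs are hypothetical names made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable


def download_all(
    urls: Iterable[str],
    fetch: Callable[[str], bytes],
    max_workers: int = 8,
) -> Dict[str, bytes]:
    """Fetch every URL concurrently and return {url: payload}.

    `fetch` is injected so the sketch stays independent of any HTTP
    library; in real use it could wrap urllib.request.urlopen.
    """
    url_list = list(urls)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map runs fetch() in worker threads and yields results
        # in the same order as url_list.
        payloads = list(pool.map(fetch, url_list))
    return dict(zip(url_list, payloads))


def fake_fetch(url: str) -> bytes:
    # Stand-in for a network request so the example runs offline.
    return f"data from {url}".encode()


# Hypothetical segment URLs; the real file layout is not shown here.
segments = [f"https://example.invalid/route/segment-{i}.bin" for i in range(4)]
results = download_all(segments, fake_fetch)
print(len(results))
```

Passing the fetch function in keeps the concurrency logic separate from the transport, so the same sketch works with a real HTTP call or a test double.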

Unfortunately, there is a cost. It’s a very small cost but technically Replicate.com is not free. Expect to drop a cent per render. Thankfully, you have a lot of trial credits to burn through and the clipper can run on a free-ish tier.

GitHub Link: https://github.com/nelsonjchen/Colorized-Project

On IntelliJ IDEs, I’ve been using

Project-Color to set a color for each project. This is useful for me because I have a lot of projects open at once, and it’s nice to be able to quickly identify which project is which.

Unfortunately, Project-Color

hasn’t been updated in a while, and it doesn’t work with the latest versions of IntelliJ.

After a few months of using IntelliJ without Project-Color, I decided to try to fix it. I’ve released the result as

Colorized-Project.

There are a few rough edges, but it’s mostly functional. I’ve been using it for a few weeks now, and it’s been working well. I’ve also submitted it to the JetBrains plugin repository.

It still needs some polish like a nicer icon, and I’d like to add a few more features.

It’s been nearly a year since I supercharged GitHub Wiki SEE with dynamically generated sitemaps.

Since then a few things have changed:

- GitHub has started to permit a select set of wikis to be indexed.

- They have not budged on letting wikis that are not publicly editable be indexed.

- There is now a star requirement of about 500, and it appears to be steadily decreasing.

- Many projects have reacted by moving or configuring their wikis to indexable platforms.

As a result, traffic to

GitHub Wiki SEE has dropped off dramatically.

This is a good thing, as it means that GitHub is moving in the right direction.

I’m still going to keep the service up, as it’s still useful for wikis that are not yet indexed and there are still about 400,000 wikis that aren’t indexed.

Hopefully GitHub will continue to move in the right direction and allow all wikis to be indexed.

End to End Longitudinal Control is currently an “extremely alpha” feature in Openpilot that is the gateway to future functionality such as stopping for traffic lights and stop signs.

Problem is, it’s hard to describe its current deficiencies without a video.

So I made a tool to help make it easier to share clips of this functionality with a view into what Openpilot is seeing, and thinking.

https://github.com/nelsonjchen/op-replay-clipper/

It’s a bit heavy in resource use though. I was thinking of making it into a web service but I simply do not have enough time. So I made instructions for others to run it on services like DigitalOcean, where it is cheap.

It is composed of a shell script and a Docker setup that fires up a bunch of processes and then kills them all when done.

Hopefully this leads to more interesting clips being shared, and more feedback on the models that comma.ai can use.

There’s a web browser version of datasette called datasette-lite which runs on Python ported to WASM with Pyodide which can load SQLite databases.

I grafted the enhanced lazyFile implementation from emscripten and then from this implementation to datasette-lite relatively recently as a curious test. I threw in an 18GB CSV from CA’s unclaimed property records here

https://www.sco.ca.gov/upd_download_property_records.html

into an FTS5 SQLite database, which came out to about 28GB after processing:

POC, non-merging Log/Draft PR for the hack:

https://github.com/simonw/datasette-lite/pull/49

You can run queries through datasette-lite if you URL hack into it and get straight to the query dialog. Browsing is kind of a dud at the moment since datasette runs a count(*) which downloads everything.

Elon Musk’s CA Unclaimed Property

Still, not bad for a $0.42/mo hostable cached CDN’d read-only database. It’s on Cloudflare R2, so there’s no BW costs.

Celebrity gawking is one thing, but the real, useful thing that this can do is search by address. If you aren’t sure of the names, such as people having multiple names or nicknames, you can search by address and get a list of properties at a location. This is one thing that the

California Unclaimed Property site can’t do.

I am thinking of making this more proper when R2 introduces lifecycle rules to delete old dumps. I could automate the process of dumping with GitHub Actions but I would like R2 to handle cleanup.

Finally released GTR or Gargantuan Takeout Rocket.

https://github.com/nelsonjchen/gtr

Gargantuan Takeout Rocket (GTR) is a toolkit of guides and software to help you take your data out of Google Takeout and put it somewhere else safe, easily, periodically, and fast. The goal is to make it easy to do the right thing: periodically backing up your Google account and related services such as your YouTube account or Google Photos.

It took a lot of time to research and to work around the issues I found in Azure.

I also needed to take apart browser extensions and implement my own browser extension to facilitate the transfers.

Cloudflare Workers were also used to work around issues with Azure’s API.

All this combined, I was able to take out 1.25TB of data in 3 minutes.

Now I’m showing it around on Twitter, Discord, Reddit, and more to users who have used VPSes to back up Google Takeout or have expressed dismay at the lack of options for users who are looking to store archives on the cheap. The response from people who’ve opted to stay on Google has been good!

I built https://cellshield.info to scratch an itch. Why can’t I embed a

spreadsheet cell?

You can click on that badge and see the sheet that is the data backing the

badge.

It is useful for embedding a cell from an informal database that is a Google

Spreadsheet atop of some README, wiki, forum post, or web page. Spreadsheets are

still how many projects are managed and this can be used to embed a small

preview of important or attention grabbing data.

It is a simple Go server that uses Google’s API to output JSON that

https://shields.io can read. Mix it in with some simple VueJS generator

front-end to take public spreadsheet URLs and tada.
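For a rough idea of what the server hands to shields.io, here is a minimal Python sketch of the JSON shape that the shields.io endpoint badge expects. The real server is written in Go and its exact output is not shown in this post; `endpoint_badge` and the sample cell value are hypothetical.

```python
import json


def endpoint_badge(label: str, message: str, color: str = "blue") -> str:
    """Build the JSON body for a shields.io endpoint badge."""
    return json.dumps(
        {
            "schemaVersion": 1,  # required by the shields.io endpoint schema
            "label": label,      # left-hand text of the badge
            "message": message,  # right-hand text, here the cell's value
            "color": color,
        }
    )


# A hypothetical cell value, as if it had been read from a spreadsheet.
body = endpoint_badge("prize pool", "$1,234")
print(body)
```

shields.io fetches a URL that returns this kind of document and renders the badge from it, so the server only has to turn a cell into `label` and `message`.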

I picked to base my implementation on shields.io’s as they make really pretty

badges and have a lot of nice documentation and options.

I run it on Cloud Run to keep the costs low and availability high. It also

utilizes Google’s implementation of authorization to get access to the Sheets API.

The badge generator web UI can also make BBCode for embedding (along with modern

Markdown and so on).

I’ve already used it for

this

and

that. The former outputs a

running bounty total and the latter is community annotation progress.

A major Game Boy development contest is using it to display the prize

pool amount: https://itch.io/jam/gbcompo21

Why did I build this?

I built it for the

Comma10K

annotation project. I contributed the original badge which attempted to use a

script that was used in the repo to analyze the completion progress.

Unfortunately, this kept on diverging from the actual progress because the human

elements kept changing the structure and organization and I had the badge

removed. The only truth is what was on the spreadsheet.

Popularity

Compared to my other projects, I don’t think the popularity will be too good for

this project though. I think it might have a chance to spread via word of mouth

but it’s not every day that a large public collaborative project is born with a

Google Spreadsheet managing it. The name isn’t that great either as it probably

conflicts with anti-wireless hysteria. Also, it’s not like there’s an agreed

upon community of Google Spreadsheet users to share this knowledge with.

I also shamelessly plugged it here but I think the question asker might be

deceased:

https://stackoverflow.com/questions/57962813/how-to-embed-a-single-google-sheets-cells-text-content-into-a-web-page/68505801#68505801

At least it was viewed 2,000 times over the last two years.

Maintenance

It’s on Cloud Run. The cost to keep it running is low as it sometimes isn’t even

running and the Sheets V4 API it uses was recently declared

“enterprise-stable”.

Because of this, it will be super easy to maintain and cheap to run. I don’t

expect any more than $2 a month.

I built this in March, but I figure I’ll write about this project better late

than never. I supercharged it in June out of curiosity to see how it would

perform. It’s just a ramble. I am not a good writer but I just wanted to write

stuff down.

TLDR: I mirrored all of GitHub’s wiki with my own service to get the content on

Google and other search engines.

If you’ve used a search engine to search up something that could appear on

GitHub, you are using my service.

https://github-wiki-see.page/

Did you know that GitHub wikis aren’t search engine optimized? In fact, GitHub

excluded their own wiki pages from the search results with their

robots.txt.

“robots.txt” is the mechanism that sites can use to indicate to search engines whether or not to index certain sections of the site.
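To make the mechanism concrete, here is a small sketch using Python's standard `urllib.robotparser`. The rules are illustrative only and are not a copy of GitHub's robots.txt; the bot name and URLs are made up.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules only; this is not a copy of GitHub's robots.txt.
rules = """\
User-agent: *
Disallow: /wiki/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# A well-behaved crawler asks before fetching each URL.
wiki_ok = parser.can_fetch("ExampleBot", "https://example.com/wiki/Nissan")
readme_ok = parser.can_fetch("ExampleBot", "https://example.com/readme")
print(wiki_ok, readme_ok)
```

A crawler that honors the file never fetches the disallowed pages, so their content never reaches the index.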

If you search on Google or Bing, you won’t find any results unless your search

query terms are directly inside the URL.

The situation with search engines is currently this. For example, if you wanted

to search for information relating to Nissan Leaf support of the openpilot

self-driving car project in their wiki, you could search for “nissan leaf

openpilot wiki”. Unfortunately, the search results would be empty and would

contain no results. If you searched for “nissan openpilot wiki”,

“https://github.com/commaai/openpilot/wiki/Nissan” would show up correctly

because it has all the terms in it. The content of the GitHub wiki page is not

used for returning good results.

It has been like this for at least 9 years.

GitHub responded that they have declined to remove it from robots.txt as they believe it can be used for SEO abuse.

I find this quite unbelievable.

The mechanism to prevent this is to utilize rel="nofollow" on all user generated <a> links in the content and with newer guidance suggesting rel="nofollow ugc".
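As a sketch of that mechanism, the following Python rewrites user-supplied HTML so every `<a>` tag carries `rel="nofollow ugc"`. It is an illustration built on the standard `html.parser` module, not anything GitHub is known to run, and `NofollowRewriter` and `add_nofollow` are hypothetical names.

```python
from html import escape
from html.parser import HTMLParser


class NofollowRewriter(HTMLParser):
    """Re-emit HTML, forcing rel="nofollow ugc" on every <a> tag."""

    def __init__(self) -> None:
        super().__init__(convert_charrefs=False)
        self.out = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # Drop any existing rel value and replace it.
            attrs = [(k, v) for k, v in attrs if k != "rel"]
            attrs.append(("rel", "nofollow ugc"))
        rendered = "".join(
            f" {k}" if v is None else f' {k}="{escape(v, quote=True)}"'
            for k, v in attrs
        )
        self.out.append(f"<{tag}{rendered}>")

    def handle_endtag(self, tag):
        self.out.append(f"</{tag}>")

    def handle_data(self, data):
        self.out.append(data)

    def handle_entityref(self, name):
        self.out.append(f"&{name};")

    def handle_charref(self, name):
        self.out.append(f"&#{name};")


def add_nofollow(html: str) -> str:
    rewriter = NofollowRewriter()
    rewriter.feed(html)
    rewriter.close()
    return "".join(rewriter.out)


print(add_nofollow('<p>See <a href="https://example.com">this</a></p>'))
```

The sketch skips comments, doctypes, and self-closing tag syntax, so it is a starting point rather than a complete sanitizer.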

By removing the content from the index, they have blinded a large portion of the

web’s users to the content in GitHub wiki pages.

Until I made a pull request to

add a notice to their documentation that wikis are not search engine optimized,

there was absolutely no official documentation or notice about this decision. I

still believe it is not enough as nothing in the main GitHub interface notes

this limitation.

I believe there are many dedicated users and communities that are not aware of

this issue who are diligently contributing to GitHub wikis. This really

frustrated me. I was one of them. Many others and I contributed a lot to the

comma.ai openpilot wiki and it was maddening that their work and my

work were not indexed.

I noticed that a lot of technical questions had Stack Overflow mirrors rank

highly and yet they weren’t Stack Overflow.

So what I did was this: I made a proxy site without a robots.txt that would

mirror GitHub wiki content to a site that did not have a restrictive robots.txt.

I put it up at https://github-wiki-see.rs. I called it GitHub Wiki SEE for

GitHub Wiki Search Engine Enablement. It’s kind of a twisted wording of SEO as I

see it as enabling and not even “optimizing”.

What’s the technology behind it?

The current iteration is a

Rust Actix Web application. It parses

the URL and makes requests out to GitHub to get the content. It then scrapes the

content for relevant HTML and reformats it to be more easily crawl-able. It then

serves the content to the search engine and users who come in from a search

engine with a link to the project page and reasoning at the bottom as a static

pinned element. Users aren’t meant to read that page and are only meant to

browse to the original GitHub page.
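The first step, turning an incoming mirror URL into the GitHub URL to fetch, might look something like this. The real application is Rust on Actix Web; this Python sketch only shows the mapping, and it assumes for illustration that the mirror reuses GitHub's `/<owner>/<repo>/wiki/<page>` path layout. `github_wiki_url` and the mirror hostname are hypothetical.

```python
from urllib.parse import urlsplit

GITHUB = "https://github.com"


def github_wiki_url(mirror_url: str) -> str:
    """Map a mirror URL to the GitHub wiki URL it stands in for.

    Assumes, for illustration, that the mirror reuses GitHub's path
    layout: /<owner>/<repo>/wiki[/<page>].
    """
    parts = [p for p in urlsplit(mirror_url).path.split("/") if p]
    if len(parts) < 3 or parts[2] != "wiki":
        raise ValueError(f"not a wiki path: {mirror_url}")
    return f"{GITHUB}/" + "/".join(parts)


print(github_wiki_url("https://mirror.example/commaai/openpilot/wiki/Nissan"))
```

Rejecting anything that is not a wiki path keeps the proxy from mirroring the rest of GitHub by accident.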

With these choices, I could meet these requirements:

- Hosted it on Google Cloud Run just in case it doesn’t become popular.

- Made it easy to deploy.

- Made it cheap.

- Made it small to run on Cloud Run.

- Made it low maintenance.

Another consideration was that it needed to not get rate limited by GitHub. To do

this, I made the server return an error and quit itself on Cloud Run if it

detected a rate limiting response to cycle to a new server with a new client IP

address. Unfortunately, this limited the number of requests each server could

handle at a time to just one.
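A minimal Python sketch of the exit-on-rate-limit idea follows. It assumes rate limiting shows up as HTTP 429, which the post does not state, and `handle_upstream` is a hypothetical helper rather than the service's real code.

```python
import sys

# Assumption: rate limiting shows up as HTTP 429. The status the real
# service checks for is not stated in the post.
RATE_LIMIT_STATUSES = {429}


def is_rate_limited(status: int) -> bool:
    return status in RATE_LIMIT_STATUSES


def handle_upstream(status: int, body: str) -> str:
    """Return the body, or exit the process when rate limited.

    Exiting lets the platform start a replacement instance, which
    may come up with a different outgoing IP address.
    """
    if is_rate_limited(status):
        sys.exit(1)
    return body


print(handle_upstream(200, "<html>wiki page</html>"))
```

Because the process dies along with any other in-flight requests, serving one request at a time per instance keeps an exit from taking unrelated requests down with it.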

Supercharging it

I originally built the project in March. I monitored the

Google Search Console like a hawk to see how the openpilot stuff I had

contributed to was responding to the proxy setup. Admittedly, it got a few users

to click through here and there and I was pretty happy. For a month or two, I

was pretty satisfied. However, I kept on thinking about the

rest of the users who lamented this situation. While some did take

my offer to post a link somewhere on their blog, README, or tweet, the adoption

wasn’t as much as I had hoped.

I had learned about

GH Archive which is a project that archived

every event of GitHub since about 2012. I also learned that the project also

mirrors its archive on

BigQuery. BigQuery allows you to search large

datasets with SQL. Using BigQuery, I was able to determine that about 2,500

GitHub Wiki pages get edited every day. In a week, about 17,000. A month? 66,000

pages. My furor at the blocking of indexing increased.

I decided to throw $45 at the issue and run a big mega-query and queried all of

GH Archive. Over the lifetime of GH Archive, 4 million pages were edited. I

dumped this into a queue and processed it with Google Cloud Functions to run the

HEAD query on all the pages in the list and re-exported it to BigQuery. 2

million pages were still there.

You can find the results of this in these public tables on

BigQuery:

github-wiki-see:show.checked_touched_wiki_pages_upto_202106

github-wiki-see:show.touched_wiki_pages_upto_202106

With this checked_touched_wiki_pages_upto_202106 table, I generated 50MB of

compressed XML sitemaps for the GitHub Wiki SEE pages. I called them seed

sitemaps. The source for this can be found

in the sitemap generator repo.

BigQuery is expensive, so I made another sitemap series for continuous updates.

An earlier version used BigQuery, but the new version runs a daily GitHub

Actions job that downloads the last week of events from GH Archive, processes

them for unique Wiki URLs and exports them to a sitemap. This handles any

ongoing GitHub Wiki changes. The source for this can also be found

in the sitemap generator repo.
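The daily job described above can be sketched roughly as follows. This is not the code from the sitemap generator repo; it assumes GH Archive's newline-delimited JSON with wiki edits recorded as `GollumEvent` and a page URL in `payload.pages[].html_url`, and it leaves out the download step and the rewrite of each URL to the mirror's hostname.

```python
import json
import xml.etree.ElementTree as ET
from typing import Iterable, List

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def wiki_urls(event_lines: Iterable[str]) -> List[str]:
    """Collect unique wiki page URLs from GH Archive JSON lines."""
    seen = set()
    for line in event_lines:
        event = json.loads(line)
        # Wiki edits are recorded as GollumEvent.
        if event.get("type") != "GollumEvent":
            continue
        for page in event["payload"]["pages"]:
            seen.add(page["html_url"])
    return sorted(seen)


def sitemap_xml(urls: Iterable[str]) -> str:
    """Render URLs as a sitemap <urlset> document."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Made-up sample events standing in for a downloaded archive file.
sample = [
    json.dumps({"type": "GollumEvent", "payload": {"pages": [
        {"html_url": "https://github.com/o/r/wiki/Home"}]}}),
    json.dumps({"type": "PushEvent", "payload": {}}),
    json.dumps({"type": "GollumEvent", "payload": {"pages": [
        {"html_url": "https://github.com/o/r/wiki/Home"}]}}),
]
urls = wiki_urls(sample)
print(sitemap_xml(urls))
```

The sitemap protocol caps a single file at 50,000 URLs, so a real job would split larger lists across several files and tie them together with a sitemap index.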

The results

I am getting thousands of clicks and millions of impressions every day. GitHub

Wiki SEE results are never at the top and average a position of about 29 or the

third page. The mirrored content may not rank high and I don’t care as the original

content was never going to rank high anyway so the content appearing at all in

the search results of thousands of users even if it was sort of deep satisfies

me.

All in all, a pretty fun project. I am really happy with the results.

- My contributions to the OpenPilot wiki are now indexed.

- Everyone else’s contributions to their wikis are now indexed as well.

- I learned a thing or two about writing a Rust web app.

- I learned a lot about SEO.

- I feel I’ve helped a lot of people who need to find a diamond in the rough and

the diamond isn’t in the URL.

- I feel a little warm that I have a high traffic site.

I have no intention of putting ads on the site. It costs me about $25 a month to

run but for the high traffic it gets, the peace of mind I’ve acquired, and the

good feelings of helping everybody searching Google, it is worth it.

I did add decommissioning steps. If it comes to it, I’ll make the site just

directly redirect but after GitHub takes Wikis off their robots.txt.

Things I Learned

This is a grab-bag of stuff I learned along the way.

- Sitemaps are a great way to get search engine traffic to your site.

- Large, multi-million page sitemaps can take many months to process. Consider

yourself lucky if it parses 50,000 URLs a day.

- The Google Search Console saying “failed to retrieve” is a lie. It means to

say “processing” but this is apparently a long standing stupid bug.

- GoogleBot is much more aggressive than Bing.

- Google’s Index of my proxy site is probably a lot larger than Bing’s by a

magnitude or two. For every 30, 40, or even 100 GoogleBot requests, I get

one Bing request.

- This is important for serving DuckDuckGo users as it is their index.

- The Rust Tokio situation is a bit of a pain as I am stuck on a version of

Tokio that is needed for stable Actix-Web. As a result, I cannot use modern

Tokio stuff that modern Rust crates or libraries use. I may port it to Rocket

or something in the future.

- A lot of people search for certification answers and test answers on GitHub

Wikis.

- GitHub Wikis and GitHub are no exception to Rule 34.

- Cloud Run is great for getting many IP addresses to use and to swap out if any

die. The outgoing IP address is extremely ephemeral and changing it is a

simple matter of simply exiting if a rate limit is detected.

- This might not always work. Just exit again if so.

- GCP’s UI is nicer for manual use compared to AWS.

- The built-in logging capabilities and sink of GCP products are also really

nice. I can easily re-export and/or stream logs to BigQuery or to a Pub Sub

topic for later analysis. I can also just use the viewer on the UI to see what

is going on.

- I get fairly free monitoring and graphing from the GCP ecosystem. The default

graphs are very useful.

- Rust web apps are really expensive to compile on Google Cloud Build. I had to

use 32-core machines to get it to compile under the default timeout of

10 minutes. I eventually sped it up and made it cheaper to build by building

on a pre-built image along with a smaller instance but it is still quite

expensive.

- The traffic from the aggressive GoogleBot is impressively large. Thankfully, I

do not have to pay the bandwidth costs.

- My sloppy use of the HTML Parser may be causing errors but RAM is fairly

generous. It’s Rust too so I don’t really have to worry that much about

garbage collection or anything similar.

- Google actually follows links it sees in the pages. When I started, it did

not. Maybe it does it only on more popular pages.

- The Rust code is really sloppy, but it still works.

With the pandemic happening, it’s been tough for many organizations to adapt. We’re all supposed to be together! One way organizations have been staying together is by using Zoom Meetings.

At work, we’ve been using a knock-off reseller version called RingCentral Meetings. From looking at their competitors, Zoom has pulled out all the stops to make their meeting experience the most efficient, resilient, reliable, and rather cheap one.

One of these features is “Breakout Rooms”. With breakout rooms, users can subdivide their meetings to make mini-meetings from a bigger meeting. For most of the year, there has been an odd restriction on Breakout Meetings though.

You cannot go to another breakout room as an attendee without the host reassigning you unless you are a Host or a Co-Host.

Obviously, this can result in a lot of load upon the poor user who is designated the host. Even the Co-Hosts can’t assign users to another breakout room.

So I made a bot:

https://github.com/nelsonjchen/BreakoutRoomsBotForZoomMeetings

It’s quite a hack, but it basically controls a web client that is a Host and assigns users based on chat commands and on attendees renaming themselves.

Last night, I just finished finally optimizing the bot. It should be able to handle hundreds of users and piles of chat commands.

This morning I learned that Zoom will add native support for switching:

https://www.reddit.com/r/Zoom/comments/irkm82/selfselect_breakout_room/

It was fun, but as brief as the bot’s existence was, its days are numbered as far as its main purpose goes. Maybe someone can reuse the code to make other Zoom Meeting add-ons/bots?

Technical and Learnings

-

I knew about this issue for many months but I never made a bot because there wasn’t an API. I waited until I was very dissatisfied with the situation and then made the bot. I should have just done it, without the API.

-

Thankfully, by doing this quickly, I only really spent like a week of time on this total. With some of the tooling I used, I didn’t have to spend too long debugging the issues.

-

The original implementation of this tool was a copy and paste into Chrome’s Console. This is a much worse experience for users than a Chrome extension.

Kyle Husmann contributed a fork that extensionized the bot and it is totally something I’ll be stealing for future hacks like this.

-

Zoom got to where they are by doing things their competitors did not and rather quickly. This feature/feedback response is great and is why they’ve been crushing. I feel this is a feature that would have taken their competitors possibly a year to implement. At this time, Microsoft Teams is also looking to implement Breakout Rooms.

-

Zoom’s web client uses React+Redux. They also use the Redux-Thunk middleware. They do stick some side-effects in places where they shouldn’t be but whatever, it works. It did make integration very hard if not impossible at this layer for my bot. However, subscribing to state changes was and is very reliable.

-

RxJS is a nice implementation of the Reactive Pattern with Observables and stuff, like Rx.NET. Without this, I could not fit the bot in so few lines of code with the reliability and usability that it currently has.

-

With

RxJS and Redux’s store, I was able to subscribe to internal Redux state changes and transform them into streams of events. For example, the bot transforms the streams of renames and chat message requests into a common structure that it then merges to be processed by later operators. Lots of code is shared and I’m able to rearrange and transform at will.

-

The local override functionality is great if you want to inject Redux Web Tools into an existing minified application. If you beautify the code, you can inject dev tools too. Here’s the line of code I replaced to inject Redux Dev Tools into Zoom’s web client.

Previous:

, s = Object(r.compose)(Object(r.applyMiddleware)(i.a));

After:

, s = window.__REDUX_DEVTOOLS_EXTENSION_COMPOSE__(Object(r.applyMiddleware)(i.a));

This was super helpful in seeing how the Redux state changes when performing actions in a nice GUI.

-

fuzzysort is great! I’m quite surprised at how unsurprising the results can be when looking up rooms by a partial or cut up name.

-

The underlying WebSocket connection is available globally as commandSocket. If you observe the commands, they are just JSON and you can inject your own commands to control the meeting programmatically.

-

Since Breakout Room status is sent back via WebSocket, the UI will automatically update. I did not have to go through the Redux store to update the Breakout Room UI.

-

UI Automation of Breakout Room UI: ~100ms vs WebSocket command: 2ms. Wow!

-

Chat messages are encrypted and decrypted client-side before going out on the Websocket interface. This probably doesn’t stop Zoom from reading the chat messages since they are the ones who gave you the keys. I could have reimplemented the encryption in the bot but after running some replay attacks, the UI did not update. Without the UI updating, I didn’t feel it was safe for the host to not know what the bot sent out on their behalf.

-

Even if I had implemented the chat message encryption, the existing UI automation of chat messages hovered around 30ms. The profiler also showed that the overhead of automation wasn’t that much and much of the chat message encryption contributed to the 30ms. It wouldn’t have been much of a performance win if I implemented it myself.

-

Possible Security-ish Issue - Since it isn’t an acceptable bounty issue, and hosts can simply disable chat, I will disclose that it is possible to DoS the native client by simply spamming chat. If there is too much text, the native clients will lock up and require a restart. Native clients don’t drop old chat logs. Web clients don’t have this issue as they will drop old chat automatically. Last but not least, it is possible for the Host to disable chat in many ways to alleviate such an attack. I wasn’t sure whether to release my load_test bot because of this but after reflection, I think it’s OK.

-

RxJS is MVP. Or rather the Reactive stuff is MVP. If you can get it into a stream, it’s super powerful. Best yet, you can reuse your experience from other ecosystems. I was previously using Rx.NET for some stuff at work. I’ll be definitely looking at using the Python version of this in the future.

- Though it did seem that the different Reactive implementations have different operators. The baselines are still great though and you can always port operators from one implementation to another.

-

Rx really benefits from TypeScript. Unfortunately, I never got to this stage.
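The stream merging and fuzzy room lookup described in the list above can be sketched in plain Python. This is not the bot's code, which is JavaScript with RxJS and fuzzysort: the `[room] Name` rename convention, the `!mv` chat command, and all function names here are assumptions for illustration. List concatenation stands in for an Rx `merge`, and a subsequence check stands in for real fuzzy scoring.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Optional


@dataclass(frozen=True)
class MoveRequest:
    """Common shape for a request, whatever its source was."""
    user: str
    room_query: str
    source: str


def from_renames(renames: Iterable[tuple]) -> Iterator[MoveRequest]:
    # Assumed convention: a user renames themselves to "[room] Name".
    for user, new_name in renames:
        if new_name.startswith("[") and "]" in new_name:
            yield MoveRequest(user, new_name[1:new_name.index("]")], "rename")


def from_chat(messages: Iterable[tuple]) -> Iterator[MoveRequest]:
    # Assumed convention: a chat command looks like "!mv room".
    for user, text in messages:
        if text.startswith("!mv "):
            yield MoveRequest(user, text[4:].strip(), "chat")


def is_subsequence(query: str, name: str) -> bool:
    """True when query's characters appear in name in order."""
    remaining = iter(name.lower())
    return all(ch in remaining for ch in query.lower())


def find_room(query: str, rooms: List[str]) -> Optional[str]:
    matches = [room for room in rooms if is_subsequence(query, room)]
    # Prefer the shortest match as a crude stand-in for a fuzzy score.
    return min(matches, key=len) if matches else None


rooms = ["Room Alpha", "Room Beta", "Lobby"]
renames = [("ann", "[alph] Ann"), ("bob", "Bob")]
chats = [("cy", "!mv rmbt"), ("dee", "hello")]

# Merge both sources into one sequence, then share the later steps.
merged = list(from_renames(renames)) + list(from_chat(chats))
assignments = {req.user: find_room(req.room_query, rooms) for req in merged}
print(assignments)
```

Once both sources produce the same `MoveRequest` shape, everything downstream (room lookup, assignment) is written once and shared.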

That said, this was fun! Now onward to the next thing.

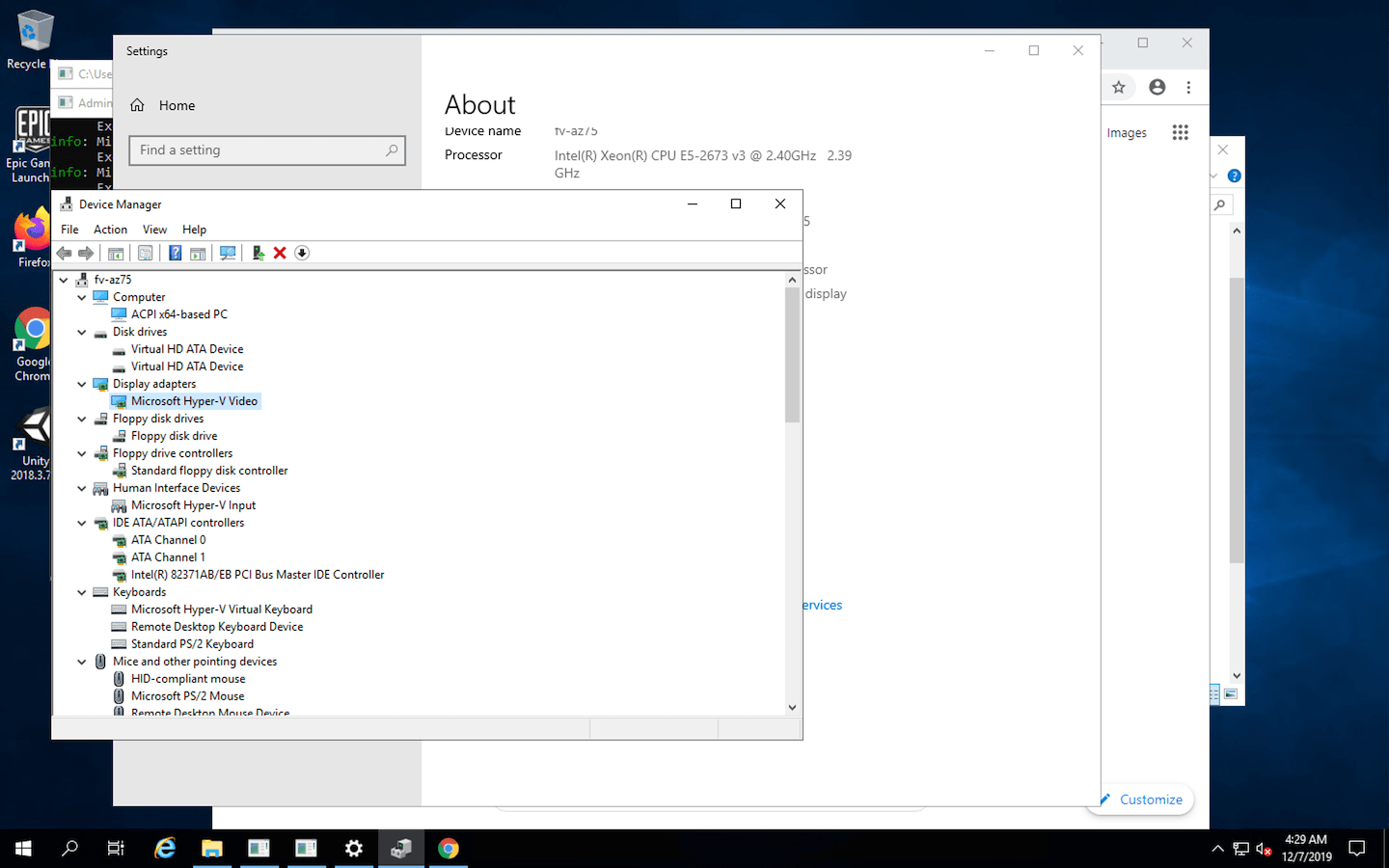

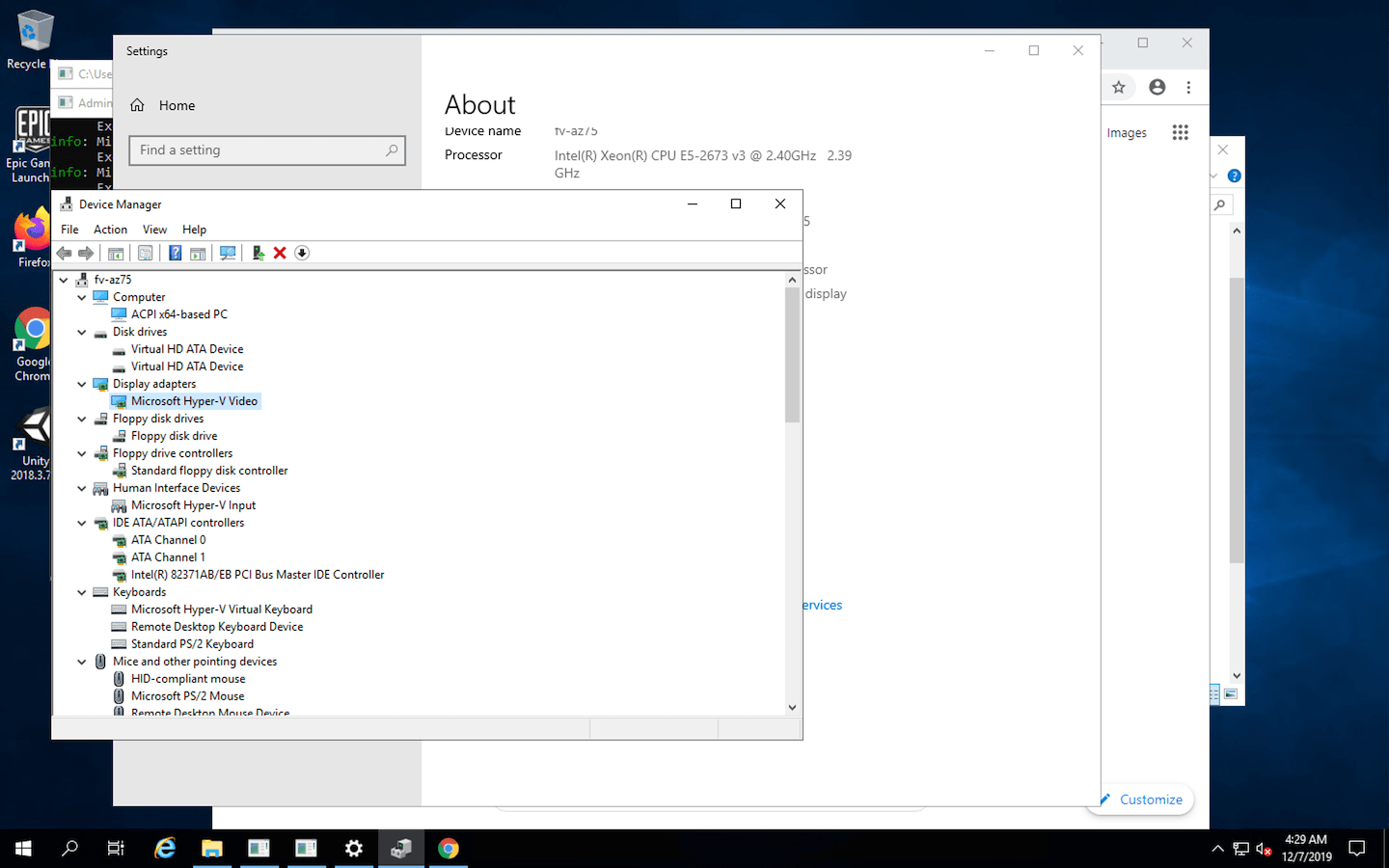

Ever wonder what the Desktop of the Windows Runners on GitHub Actions looks like?

Or perhaps you’re missing the ability to

RDP into build agents like on Appveyor.

I wrote some steps that use ngrok to make a reverse tunnel possible. I also turned on RDP if it wasn’t on already and set a password.

Take a look here!

https://github.com/nelsonjchen/reverse-rdp-windows-github-actions